Primer 3: The Role of Clinical Reasoning in Diagnostic Excellence

Summary

This is Part 3 of a three-part series of primers dedicated to diagnostic error and excellence. This primer focuses on the role of cognition in diagnostic excellence, with a discussion of cognitive errors and methods to detect and prevent them. Primer 2 explores system approaches to diagnostic excellence. Primer 1 focuses on foundational concepts in diagnostic error.

Clinical Reasoning in Diagnosis

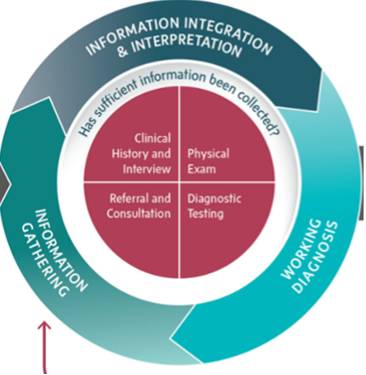

The task of diagnosis is a complex, iterative classification process that involves gathering and integrating clinical information, interpreting this information and building a working diagnosis, and consistently re-evaluating the diagnosis as new information becomes available.1 Throughout this process, clinicians are called upon to make decisions that may substantially change the course of a patient's diagnostic journey and overall health. During this process (Figure 1), errors can occur, leading to a diagnosis that is missed, wrong, or delayed.2 While diagnostic error has a range of causes, one important class is cognitive error, which occurs when a clinician engages in faulty reasoning.

Figure 1. Clinical Reasoning in the Clinician-Patient Encounter (modified figure)

Source: Reproduced with permission from the National Academies Press.1

Cognitive Errors

Cognitive errors are those in which the problem is caused by inadequate knowledge, faulty data gathering, inaccurate clinical reasoning, or faulty verification in the mind of the individual provider. Failure to elicit a complete and accurate history or to identify abnormalities in the physical examination is one example. Misinterpreting information from laboratory tests or medical imaging is another.

Dual Process Model of Clinical Thinking: Clinical reasoning involves two different cognitive processes. Type 1 processes are automatic and unconscious, whereas Type 2 processes involve conscious, rational consideration. Both Type 1 and Type 2 processing are error prone and can result in diagnostic errors.

- Type 1 processing is a fast, intuitive process where new information is associated with similar examples in one’s memory.3 In everyday practice, clinicians easily recognize the typical presentations of common conditions, and thus much of diagnosis involves initial Type 1 processing. An example of Type 1 processing in medicine is making a “doorway diagnosis” – rapidly recognizing a typical array of signs and symptoms that point to a classic presentation of a disease.

- Type 2 processing is slow, deliberate, and effortful consideration. Type 2 processing overrides Type 1 processing when providers pause to reflect on their reasoning in a systematic way or when a patient presentation is unusual or atypical. The computational nature of Type 2 processing can tax limited working memory and contribute to medical errors.4 An example of Type 2 processing in medicine is writing an assessment in which the data supporting or refuting each of the differential diagnoses are evaluated.

Diagnostic decisions must often be made with suboptimal amounts of information (ranging from scarcity to overload), under time constraints, under emotional stress, and through physical and mental fatigue on the part of the clinician. The concept of heuristics is central to understanding how clinicians use information to arrive at decisions, particularly under such suboptimal conditions. Heuristics are broadly defined as cognitive processes that aid in complex decision-making. They may be viewed as “fast and frugal” cognitive processes that prioritize subsets of the available information to reach a decision, while minimizing time and cognitive effort.5

While Type 1 heuristics can be useful tools, they can also produce incorrect conclusions that, if not "double-checked", can ultimately lead to suboptimal decision-making. In such cases where heuristics lead to faulty reasoning, they are considered cognitive biases. Within the specific context of clinical decision-making, many cognitive biases have been described,4,7,8 and evidence suggests that these biases are linked to medical errors.3,9,10 Notable biases include: availability bias, in which individuals prioritize information that comes most readily to mind, often due to recent experience; anchoring bias, wherein clinicians may assign greater importance than warranted to a particular piece of information that emerges early in the diagnostic process and continue to base decisions on this anchor despite discovery of new information; confirmation bias, wherein clinicians may misinterpret and/or even direct the diagnostic work-up to support a pre-existing theory despite contradictory evidence; and premature closure, in which a working diagnosis is prematurely considered to be a final diagnosis and further diagnostic work-up is not pursued. We enumerate many of these cognitive biases in Table 1, along with definitions and links to PSNet WebM&M cases and commentaries in which these biases affected clinical decisions.

Table 1. Common Cognitive Biases, Definitions, and Examples

| Cognitive Bias/Heuristic | Definition | PSNet WebM&Ms |
| --- | --- | --- |
| Anchoring bias / Premature closure | The common cognitive trap of allowing first impressions to exert undue influence on the diagnostic process. Clinicians often latch onto features of a patient's presentation that suggest a specific diagnosis. In some cases, subsequent developments in the patient's course will prove inconsistent with the first impression. Anchoring bias refers to the tendency to hold on to the initial diagnosis, even in the face of disconfirming evidence. | Do Not Miss Sepsis Needles in Viral Haystacks!; From Possible to Probable to Sure to Wrong—Premature Closure and Anchoring in a Complicated Case; Anemia and Delayed Colon Cancer Diagnosis |
| Ascertainment bias | When a healthcare provider’s thinking is shaped by prior expectations, for example, seeing what one expects to find | |
| Automation complacency | Overconfidence in an automated machine or process | Don’t Wait to Collect an Accurate Weight: A Case of Subtherapeutic Insulin Therapy |
| Availability bias | The tendency to assume when judging probabilities or predicting outcomes that the first possibility that comes to mind (ie, the most cognitively "available" possibility) is also the most likely possibility. Personal experience can also trigger availability bias, as when the diagnosis underlying a recent patient's presentation immediately comes to mind when any subsequent patient presents with similar symptoms. Particularly memorable cases may similarly exert undue influence in shaping diagnostic impressions. | Hindsight is 20/20: Thrombolytics for Alcohol Intoxication; Missed Diagnosis of Addison’s Disease in Adolescent Presenting with Fatigue |
| Blind obedience | Placing undue reliance on test results or "expert" opinion | |
| Commission bias | The tendency, in the midst of uncertainty, to err on the side of action regardless of evidence | |
| Confirmation bias | The tendency to focus on evidence that supports a working hypothesis, such as a diagnosis in clinical medicine, rather than to look for evidence that refutes it or provides greater support to an alternative diagnosis; “tunnel vision” | Medication Mix-Up Leads to Patient Death; A Missed Bowel Perforation - the Importance of Diagnostic Reasoning |
| Diagnostic momentum | A diagnosis is given to a patient without adequate evidence and then perpetuated across various care settings and providers | |
| Framing effects | Diagnostic decision-making unduly biased by subtle cues and collateral information | |
| Hindsight bias | Knowing the outcome of an event influences the perception and memory of what actually might have occurred; in analyzing diagnostic errors, this can compromise learning by creating illusions of the participants' cognitive abilities, with potential for both underestimation and overestimation of what the participants knew (or could have known) | |
| Implicit bias | Attitudes or associations that unconsciously influence behavior, interactions, and/or decision-making; can be based on race, age, gender identity, sexual orientation, socioeconomic status, disability, weight, mental health, or other characteristics | Paroxysmal Supraventricular Tachycardia Masquerading as Panic Attacks (gender bias); Implicit Biases, Interprofessional Communication, and Power Dynamics (gender bias); Diagnostic Delay in the Emergency Department (obesity bias); Uterine Artery Injury during Cesarean Delivery Leads to Cardiac Arrests and Emergency Hysterectomy (racial bias); False Assumptions Result in a Missed Pneumothorax after Bronchoscopy with Transbronchial Biopsy (racial bias) |
| Inattentional blindness | The tendency to miss important events or data in an intense or complex situation because of competing attentional tasks and divided focus (the Gorilla Example). Individuals experiencing inattentional blindness unknowingly orient themselves toward and process information from only one part of their environment while excluding others, which can contribute to task omissions and missed signals, such as incorrect medication administration. | Medication Mix-Up Leads to Patient Death |
| Overconfidence | The tendency to think one knows more than one does, or that one's assumptions, conclusions, and actions are correct | |
| Representativeness restraint | The healthcare provider looks for prototypical manifestations of disease (pattern recognition) and fails to consider atypical variants | Endometriosis: A Common and Commonly Missed and Delayed Diagnosis |
| Sunk costs phenomenon | The more the healthcare provider invests in a particular diagnosis, the less likely they may be to release it and consider alternatives | A Missed Bowel Perforation - the Importance of Diagnostic Reasoning |


Strategies to Optimize Clinical Reasoning

Diminish the Effects of Cognitive Bias

The prevention or correction of cognitive bias requires recognition of this phenomenon and its role in diagnostic error. For this reason, it is critical to educate medical providers to recognize cognitive bias in time to prevent harm. A systematic review of educational interventions to reduce cognitive error demonstrated some improvement in diagnostic accuracy, but limited data are available on interventions in the clinical setting.11 Cognitive reasoning tools, a set of interventions that includes checklists, mindful practice, diagnostic “time-outs,” cognitive forcing strategies, second opinions, and clinical decision support, may provide modest improvements in diagnostic accuracy when employed in the workplace.12,13 Cognitive strategies to reduce bias include forced consideration of alternative diagnoses and deliberate mindfulness and metacognition about the diagnostic process.14 Certain strategies may be useful for combating specific biases. For example, explicit acknowledgement of diagnostic uncertainty can be an antidote to overconfidence and anchoring biases. Seeking feedback on a working diagnosis from a colleague or getting a second opinion on a case can help reduce premature closure, availability bias, and confirmation bias.15 Finally, taking accountability for a diagnosis can combat diagnostic momentum and framing effects.16 Table 2 reviews some common strategies that can aid in the recognition of cognitive bias, along with examples.

Type 2 processing techniques to reduce cognitive bias are time- and labor-intensive, so they cannot reasonably be employed in every diagnostic situation. For this reason, it is a priority to target situations at high risk for cognitive bias and alert diagnosticians to employ risk-reduction strategies. One high-risk situation is the handoff, in which care of a patient is transitioned between care teams and diagnostic momentum and framing may be at play.13 Emotionally charged decisions are known to be more swayed by Type 1 processing, so awareness of one’s emotional relationship to a patient is important when examining affective bias.17 Forced “slowing down” strategies, such as a planned time-out in the operating room or a diagnostic time-out in high cognitive load situations like a busy emergency room shift, can prompt a review of diagnostic reasoning for cognitive biases.1

Calibration

Calibration refers to the relationship between the accuracy of a diagnosis and the clinician’s confidence in that diagnosis.18,19 Miscalibration can lead to one of two types of error: underconfidence in a correct diagnosis can lead to overtesting and low-value care, while overconfidence in an inaccurate diagnosis may prevent Type 2 processing from being engaged to critically examine flaws in the diagnostic reasoning process.20 Clinicians do not routinely receive feedback about delayed, missed, or incorrect diagnoses, and this lack of systematic feedback poses challenges to diagnostic calibration.21,22 In fact, most healthcare organizations lack any systematic mechanism for detecting and reporting on diagnostic error.15 Building better systems to detect and report on diagnostic errors is foundational to promoting appropriate diagnostic calibration among clinicians and is addressed in the primer "Systems-Based Approaches to Diagnostic Excellence."
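
As a rough illustration of the calibration concept, the Python sketch below compares mean stated confidence with observed accuracy over a set of cases. The `Case` record, the `calibration_gap` function, and all of the numbers are hypothetical and are not drawn from the cited studies; the gap is only one simple way to summarize calibration.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """One diagnostic episode (hypothetical structure for illustration)."""
    confidence: float  # clinician's stated confidence in the diagnosis, 0.0 to 1.0
    correct: bool      # whether the diagnosis was later confirmed


def calibration_gap(cases: list[Case]) -> float:
    """Return mean confidence minus observed accuracy.

    A positive value suggests overconfidence, a negative value suggests
    underconfidence, and a value near zero suggests good calibration.
    """
    if not cases:
        raise ValueError("at least one case is required")
    mean_confidence = sum(c.confidence for c in cases) / len(cases)
    accuracy = sum(c.correct for c in cases) / len(cases)
    return mean_confidence - accuracy


# Hypothetical data: high confidence on every case, but only 2 of 4 correct.
cases = [
    Case(0.9, True),
    Case(0.9, False),
    Case(0.8, True),
    Case(0.8, False),
]
gap = calibration_gap(cases)  # mean confidence 0.85, accuracy 0.50
print(f"Calibration gap: {gap:+.2f}")
```

A measure like this can only be computed when outcome feedback is available for each case, which is the systematic feedback the paragraph above describes as often missing.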

Accurate recognition of and timely communication about diagnostic uncertainty can prompt clinicians to engage Type 2 analytic reasoning about diagnosis. Interventions have included the development of an “uncertain diagnosis” label and the application of natural language processing and machine learning to identify linguistic patterns in clinical notes that may signal the presence of diagnostic uncertainty in a case.23,24
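
To make the idea of detecting linguistic signals of uncertainty concrete, here is a deliberately simple, rule-based Python sketch. It is not the machine learning approach described in the cited work; the phrase list and the `uncertainty_cues` function are assumptions chosen for illustration, and a real system would need a validated lexicon or a trained model.

```python
import re

# Hypothetical hedging phrases, chosen only for illustration.
UNCERTAINTY_PATTERNS = [
    r"\bunclear\b",
    r"\buncertain\b",
    r"\bpossibl[ey]\b",
    r"\bcannot rule out\b",
    r"\bdifferential (?:diagnosis )?includes\b",
]
_COMPILED = [re.compile(p, re.IGNORECASE) for p in UNCERTAINTY_PATTERNS]


def uncertainty_cues(note: str) -> list[str]:
    """Return the hedging phrases found in a note, grouped by pattern order."""
    found = []
    for pattern in _COMPILED:
        found.extend(match.group(0) for match in pattern.finditer(note))
    return found


note = "Etiology unclear; possible pneumonia, cannot rule out PE."
cues = uncertainty_cues(note)
if cues:
    print("Possible diagnostic uncertainty; cues found:", cues)
```

A note that triggers any cue could then be flagged for an “uncertain diagnosis” label or a prompt to revisit the differential, subject to clinician review.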

Table 2. Strategies to Strengthen Diagnostic Accuracy

| Strategy | Examples |
| --- | --- |
| | Utilizing clinical prediction rules in diagnosis helps correct for biased clinician estimates of base rates and pre- or post-test probability |
| | Engage in a deliberate, team-based “time-out” whereby the leading diagnosis is critically reappraised |
| | Using a diagnostic reminder system to expand differential diagnosis |
| Explicit acknowledgement of uncertainty16 | Employ a label indicating that diagnostic uncertainty is present, either in handoffs or in patient charts; utilize technology (artificial intelligence or machine learning) to identify when diagnostic uncertainty is present23,24 |
| Forced consideration of alternative diagnoses17 | The creation of a differential diagnosis; consider the “worst case scenario” diagnosis; consider a predefined list of “do not miss” diagnoses for a given clinical presentation |
| Second opinion/Peer consult16 | Seeking feedback on diagnoses may combat premature closure, anchoring, or diagnostic momentum |
| Taking accountability17 | When diagnosticians take personal responsibility for a diagnosis, they are more likely to reflect critically upon it |
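
The link between base rates and pre- and post-test probability mentioned in Table 2 can be made explicit with the standard odds form of Bayes' theorem. The Python sketch below is illustrative only: the function name `post_test_probability` and the example values are assumptions, not figures from any validated clinical prediction rule.

```python
def post_test_probability(pre_test_probability: float, likelihood_ratio: float) -> float:
    """Update a pre-test probability with a likelihood ratio.

    Uses the odds form of Bayes' theorem:
        post-test odds = pre-test odds * likelihood ratio
    """
    if not 0.0 < pre_test_probability < 1.0:
        raise ValueError("pre_test_probability must be strictly between 0 and 1")
    if likelihood_ratio < 0.0:
        raise ValueError("likelihood_ratio must be non-negative")
    pre_test_odds = pre_test_probability / (1.0 - pre_test_probability)
    post_test_odds = pre_test_odds * likelihood_ratio
    return post_test_odds / (1.0 + post_test_odds)


# Hypothetical example: a 10% base rate and a positive finding with LR+ = 9.
example = post_test_probability(0.10, 9.0)
print(f"Post-test probability: {example:.2f}")
```

Anchoring the estimate to an explicit base rate in this way is one reason prediction rules can counter intuitive over- or underestimation of disease probability.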

Conclusion

Although daunting in scope and severity, diagnostic error can be addressed through structural, environmental, educational, and individual interventions. More work is needed to strengthen tools to detect and prevent diagnostic error and to create innovative strategies that foster diagnostic excellence.

___

Authors

Roslyn Seitz, MSN, MPH

Associate Editor, AHRQ’s Patient Safety Network (PSNet)

Health Sciences Assistant Clinical Professor

University of California, Davis


Kristen Johnson, MD, MSc

Pediatric Hospital Medicine Attending, Children’s National Hospital

Assistant Professor, The George Washington University School of Medicine & Health Sciences


Paarth Kapadia, MD

Resident Physician

University of Pittsburgh Medical Center


Mark L Graber, MD, FACP

Founder, Community Improving Diagnosis in Medicine (CIDM)

Professor Emeritus, Stony Brook University, NY


This primer was funded under contract number 75Q80119C00004 from the Agency for Healthcare Research and Quality (AHRQ), U.S. Department of Health and Human Services. The authors are solely responsible for this report’s contents, findings, and conclusions, which do not necessarily represent the views of AHRQ. Readers should not interpret any statement in this report as an official position of AHRQ or of the U.S. Department of Health and Human Services. None of the authors has any affiliation or financial involvement that conflicts with the material presented in this report.

Publication date: 6/25/2025

___

References

1. Balogh EP, Miller BT, Ball JR, eds. Committee on Diagnostic Error in Health Care, Board on Health Care Services, Institute of Medicine, The National Academies of Sciences, Engineering, and Medicine. Improving Diagnosis in Health Care. National Academies Press; 2015.

2. Newman-Toker DE, Pronovost PJ. Diagnostic errors—the next frontier for patient safety. JAMA. 2009;301(10):1060-1062.

3. Norman GR, Monteiro SD, Sherbino J, et al. The causes of errors in clinical reasoning: cognitive biases, knowledge deficits, and dual process thinking. Acad Med. 2017;92(1):23-30.

4. Saposnik G, Redelmeier D, Ruff CC, et al. Cognitive biases associated with medical decisions: a systematic review. BMC Med Inform Decis Mak. 2016;16(1):138.

5. Gigerenzer G, Hoffrage U, Kleinbölting H. Probabilistic mental models: a Brunswikian theory of confidence. Psychol Rev. 1991;98(4):506-528.

6. Kahneman D. Thinking, Fast and Slow. Farrar, Straus and Giroux; 2011.

7. Loncharich MF, Robbins RC, Durning SJ, et al. Cognitive biases in internal medicine: a scoping review. Diagnosis (Berl). 2023;10(3):205-214.

8. Watari T, Tokuda Y, Amano Y, et al. Cognitive bias and diagnostic errors among physicians in Japan: a self-reflection survey. Int J Environ Res Public Health. 2022;19(8):4645.

9. van den Berge K, Mamede S. Cognitive diagnostic error in internal medicine. Eur J Intern Med. 2013;24(6):525-529.

10. Mamede S, van Gog T, van den Berge K, et al. Effect of availability bias and reflective reasoning on diagnostic accuracy among internal medicine residents. JAMA. 2010;304(11):1198-1203.

11. Tung A, Melchiorre M. Debiasing and educational interventions in medical diagnosis: a systematic review. Univ Toro Med J. 2023;100(1):48-57.

12. Staal J, Hooftman J, Gunput STG, et al. Effect on diagnostic accuracy of cognitive reasoning tools for the workplace setting: systematic review and meta-analysis. BMJ Qual Saf. 2022;31(12):899-910.

13. Yale S, Cohen S, Bordini BJ. Diagnostic time-outs to improve diagnosis. Crit Care Clin. 2022;38(2):185-194.

14. Croskerry P. From mindless to mindful practice--cognitive bias and clinical decision making. N Engl J Med. 2013;368(26):2445-2448.

15. Freund Y, Goulet H, Leblanc J, et al. Effect of systematic physician cross-checking on reducing adverse events in the emergency department: the CHARMED cluster randomized trial. JAMA Intern Med. 2018;178(6):812-819.

16. Richards JB, Hayes MM, Schwartzstein RM. Teaching clinical reasoning and critical thinking. Chest. 2020;158(4):1617-1628.

17. Croskerry P, Singhal G, Mamede S. Cognitive debiasing 2: impediments to and strategies for change. BMJ Qual Saf. 2013;22(suppl 2):ii65-ii72.

18. Cifu AS. Diagnostic errors and diagnostic calibration. JAMA. 2017;318(10):905-906.

19. Meyer AND, Payne VL, Meeks DW, et al. Physicians’ diagnostic accuracy, confidence, and resource requests: a vignette study. JAMA Intern Med. 2013;173(21):1952-1958.

20. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121(5 suppl):s2-s23.

21. Schiff GD, Hasan O, Kim S, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169(20):1881-1887.

22. Khazen M, Mirica M, Carlile N, et al. Developing a framework and electronic tool for communicating diagnostic uncertainty in primary care: a qualitative study. JAMA Netw Open. 2023;6(3):e232218.

23. Marshall TL, Nickels LC, Brady PW, et al. Developing a machine learning model to detect diagnostic uncertainty in clinical documentation. J Hosp Med. 2023;18(5):405-412.

24. Ipsaro AJ, Patel SJ, Warner DC, et al. Declaring uncertainty: using quality improvement methods to change the conversation of diagnosis. Hosp Pediatr. 2021;11(4):334-341.

25. Graber ML, Kissam S, Payne VL, et al. Cognitive interventions to reduce diagnostic error: a narrative review. BMJ Qual Saf. 2012;21(7):535-557.