Editor's Pick: AI shows promise in improving diagnostic and management quality in new urgent care-based study

"Comparison of Initial Artificial Intelligence (AI) and Final Physician Recommendations in AI-Assisted Virtual Urgent Care Visits"

Zeltzer D, Kugler Z, Hayat L, Brufman T, Ilan Ber R, Leibovich K, Beer T, Frank I, Shaul R, Goldzweig C, Pevnick J.

Annals of Internal Medicine

April 4, 2025

What's the point?

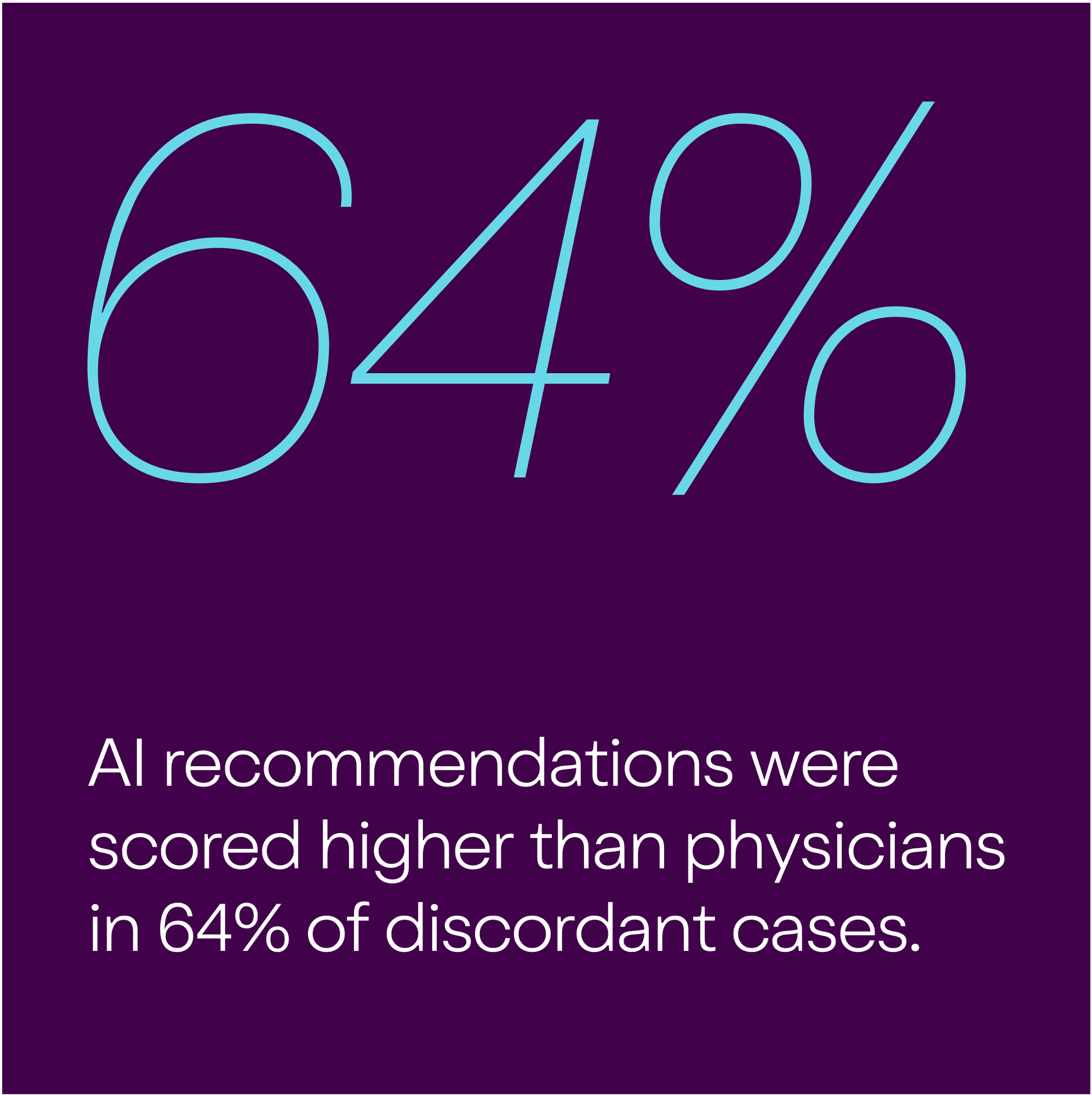

Researchers are exploring how artificial intelligence (AI) could help doctors make better diagnoses, but they need more data to understand its real impact. This retrospective cohort study from Cedars-Sinai’s virtual urgent care clinic in Los Angeles looked at 461 patient visits in which an AI program provided an initial assessment before the patient saw a physician. Researchers compared the AI’s diagnostic and treatment recommendations with the physician’s final decisions. The two matched in 56.8% of cases; in the remaining cases, the AI’s recommendations were rated higher 64% of the time, while the physicians’ decisions were rated higher 36% of the time.

The Bottom Line: AI was more consistent in following treatment guidelines, while physicians performed better when patient symptoms changed over time or required a physical exam.

Why does this matter?

This is one of the first real-world studies comparing AI and physicians on both diagnosis and treatment for common conditions. It focused on meaningful outcomes like appropriate care and potential harm.

While it doesn’t suggest AI can replace doctors, it shows where each may perform better. It’s worth noting that the study was funded by the AI’s developer. Some scoring differences also may not reflect what truly matters to patients, since two different but reasonable approaches can still count as a disagreement.

Who does this impact?

This study is relevant to clinicians, patients and caregivers, learners, and healthcare administrators and policymakers. Specifically, clinicians interested in AI applications on the front lines of care can use this evidence to support that interest. Policymakers and administrators can use the data to build buy-in for AI implementation when clinicians are skeptical. Overall, the study highlights how embedded AI can support care by surfacing key information (like past labs or allergies) and helping align treatment decisions, pointing to a future where AI works alongside providers.

CODEX Editor

Anjana Sharma, MD, MAS

Learning Hub Faculty Lead, UCSF CODEX

Associate Professor, UCSF Department of Family and Community Medicine

Join the Conversation

We’d love to hear your take—join the conversation with us on LinkedIn or Bluesky and share your thoughts!

About Editor's Picks

Curated by the UCSF CODEX team, each Editor’s Pick features a standout study or article that moves the conversation on diagnostic excellence forward. These pieces offer meaningful, patient-centered insights, use innovative approaches, and speak to the needs of patients, clinicians, researchers, and decision-makers alike. All are selected from respected journals or outlets for their rigor and real-world relevance.